Transformer-Based Chinese Characters Image Generation

Conducted in NEXT Lab, font generation is often time-consuming, especially for glyph-rich languages like Chinese. To address this, we developed a one-shot font generation model that can synthesize high-quality glyphs from only a single reference character. At the core of this approach is:

- A novel mixer architecture composed of sequential channel and spatial attention modules

- The channel attention identifies what features to extract

- The spatial attention learns where to align those features, allowing for robust style-content disentanglement and spatial correspondence learning.

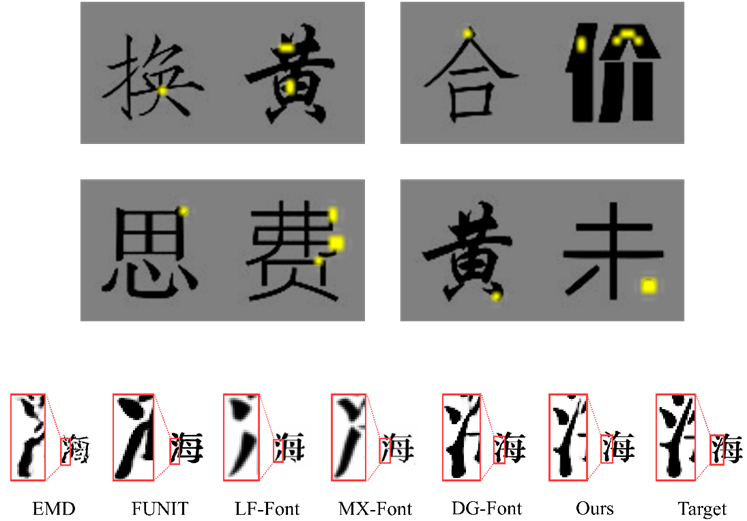

The model performs well on benchmark datasets, validated by both quantitative results and user studies. Visualizations further confirm the effectiveness of the proposed spatial attention mechanism in capturing fine-grained structural alignments.

My primary contributions include:

- Designing the design and implementation of the spatial cross-attention module, enabling long-range pixel-level interactions between style and content features

- Implementing the overall model architecture

- Leading multiple ablation studies to evaluate the effectiveness of different attention strategies